Generative AI

Learn how to integrate generative AI into your apps and services. Use state-of-the-art models for text generation, audio synthesis, and more to create innovative experiences.

What is Generative AI?

Generative AI refers to artificial intelligence that creates new content (such as text, images, audio, or code) based on patterns learned from existing data. It typically relies on transformer models for text and diffusion models for images. These techniques are transforming industries by enabling personalized experiences, automating creative processes, and opening new possibilities for content generation.

Generative AI Models

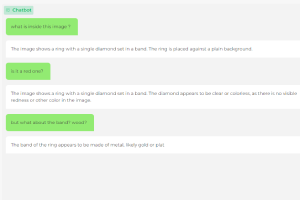

Text Generation Models

Generate human-like text for chatbots, content creation, summarization, and more.

Image Generation Models

Create artwork or realistic images from text descriptions using AI models like Stable Diffusion.

Audio Models

Transcribe speech with AI models like Whisper, or generate audio, music, or speech from data inputs.

Other Models

Generate diverse outputs like code, video, or 3D designs.

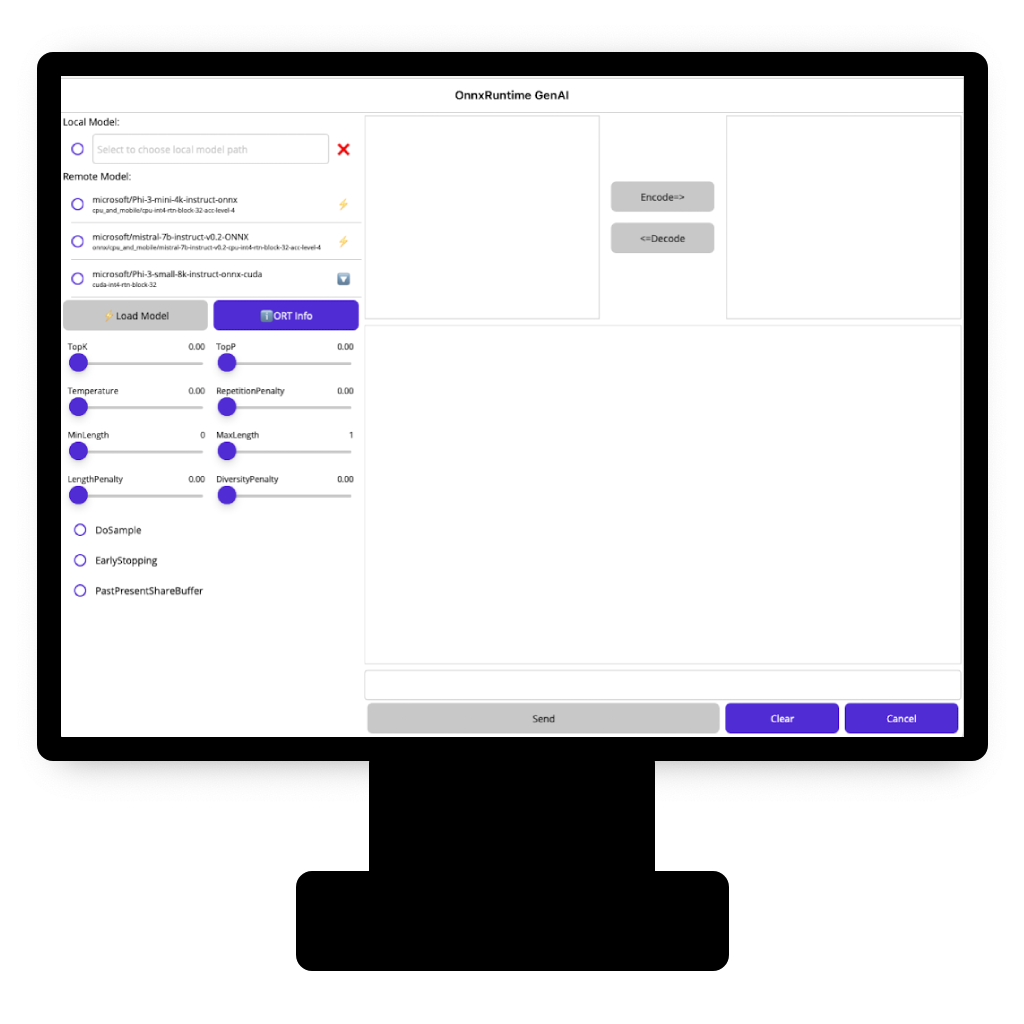

ONNX Runtime ❤️ Generative AI

Use ONNX Runtime for high performance, scalability, and flexibility when deploying generative AI models. With support for diverse frameworks and hardware acceleration, ONNX Runtime ensures efficient, cost-effective model inference across platforms.
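As a rough illustration of the hardware acceleration point, the sketch below picks ONNX Runtime execution providers in a preferred order and falls back to CPU. The helper `pick_providers`, the preference order, and the `model.onnx` path are assumptions made for this example and do not come from this page; `onnxruntime.get_available_providers()` and the `providers` argument of `InferenceSession` are part of the ONNX Runtime Python API.

```python
from typing import Iterable, List, Sequence

# Preference order is an assumption for illustration: try accelerators first,
# then fall back to CPU, which standard ONNX Runtime builds include.
PREFERRED_PROVIDERS = (
    "CUDAExecutionProvider",
    "DmlExecutionProvider",
    "CoreMLExecutionProvider",
    "CPUExecutionProvider",
)


def pick_providers(available: Iterable[str],
                   preferred: Sequence[str] = PREFERRED_PROVIDERS) -> List[str]:
    """Return the preferred providers that are actually available, in order."""
    available_set = set(available)
    chosen = [name for name in preferred if name in available_set]
    # Keep CPU as a last-resort fallback even if it was not matched above.
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime is not installed; nothing to query")
    else:
        providers = pick_providers(ort.get_available_providers())
        print("Would create the session with:", providers)
        # "model.onnx" is a placeholder path, not a file shipped with this page.
        # session = ort.InferenceSession("model.onnx", providers=providers)
```

Keeping the selection logic separate from session creation means the same code can run unchanged on a desktop with a GPU, a laptop, or a CPU-only cloud instance.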

Multiplatform

Run ONNX Runtime on Desktop 🖥️, Mobile 📱, Browser 💻, or Cloud ☁️.

On Device

Run inference privately 🔐 and save costs ⚙️ with on-device models.

Multimodal Compatibility

Use ONNX Runtime with vision or omni models. We work to quickly enable all new model scenarios 🚀.

Easy to Use

Get started quickly ⏩ with our examples and tutorials.

Tutorials & Demos

Get started with any of these tutorials and demos:

Olive Examples

Use Olive, a hardware-aware optimizer, to quickly generate the ideal ONNX model for your needs.

Try it out!